Voice User Interface Design New Solutions to Old Problems

Voice User Interfaces — 15 challenges and opportunities for design

If you've ever rolled your eyes at the responses you got from Siri, Alexa, or Google Assistant, you've probably wondered what makes it so difficult for voice technology to get things right. Is it really so hard to design the right thing, and to design things right, when it comes to voice interactions?

Having been part of the Voice User Experience design team at Samsung, I picked up a few lessons about this field. Here are my two cents on some challenges I faced as a VUI designer, and some challenges the field of Voice UX design still needs to tackle.

Note: Voice assistants are abbreviated as 'VA' or (loosely) referred to as AIs.

1. Breaking the ice with an AI?

What an AI can understand is a big question mark. Does it only talk about weather, news, calls, music, alarms and the like? Do I, as a user, know what I can expect? For sports, is it just the score, or the match status, or the league standings too? For news, how much news, what kinds, and full details or just headlines?

What can you say after the base command? For example, "What's the weather today?" shows the weather card and tells you the temperature. Then what? Can I ask "How about tomorrow?", "How does it look all week?", or "Will it rain any time this week?" The thing is, many of these assistants can answer most of the usual follow-up queries, but little has been done to educate users about what more is possible. Chatbots have the same problem: if they are not designed well, it is unclear what the bot can handle next in the conversation.
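To make the follow-up idea concrete, here is a minimal Python sketch of context carryover: the assistant remembers the last intent and its slots, so "How about tomorrow?" reuses the weather intent and only changes the date, and it offers a hint so users discover follow-ups they might not know about. The keyword matching, the intent name `get_weather`, and the hint texts are hypothetical stand-ins for a real natural language understanding layer.

```python
from dataclasses import dataclass, field
from typing import Optional, Tuple

# Hypothetical hints that tell users which follow-ups are supported.
FOLLOW_UP_HINTS = {
    "get_weather": ["How about tomorrow?", "How does it look all week?"],
}

@dataclass
class DialogueContext:
    """Remembers the last intent and its slots so follow-ups can reuse them."""
    intent: Optional[str] = None
    slots: dict = field(default_factory=dict)

def interpret(utterance: str, ctx: DialogueContext) -> Tuple[str, dict]:
    """Toy keyword-based interpreter; a real assistant would use an NLU model."""
    text = utterance.lower().strip()
    if "weather" in text:
        # A complete request starts a fresh context.
        ctx.intent, ctx.slots = "get_weather", {"date": "today"}
    elif text.startswith("how about") and ctx.intent is not None:
        # A follow-up keeps the previous intent and overrides only the date.
        new_date = text[len("how about"):].strip(" ?")
        ctx.slots = {**ctx.slots, "date": new_date}
    else:
        return "unknown", {}
    return ctx.intent, dict(ctx.slots)

def hint_for(intent: str) -> str:
    """Suggests follow-ups so users learn what else they can say."""
    options = FOLLOW_UP_HINTS.get(intent)
    if not options:
        return ""
    return "You can also ask: " + " or ".join(f'"{o}"' for o in options)

ctx = DialogueContext()
print(interpret("What's the weather today?", ctx))  # ('get_weather', {'date': 'today'})
print(interpret("How about tomorrow?", ctx))        # ('get_weather', {'date': 'tomorrow'})
print(hint_for("get_weather"))
```

Speaking the hint once, right after the first answer, is one low-cost way to teach users that follow-ups exist without reading out a manual.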

2. Conveying what the assistant cannot do

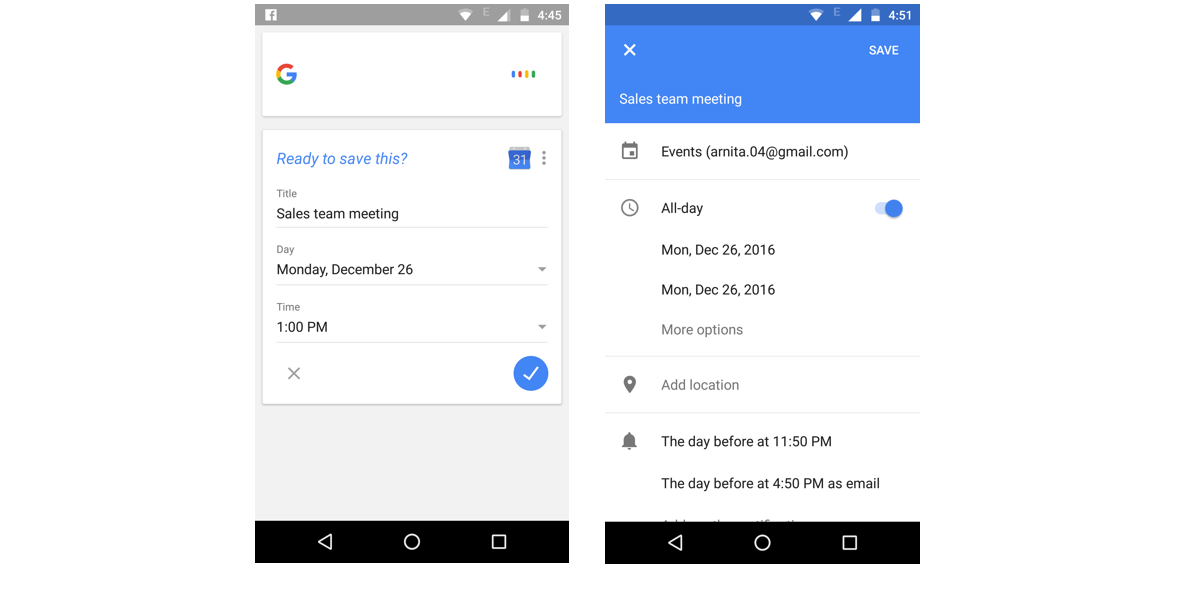

Conveying what the VA can understand is only half the job; it is just as crucial to convey what it cannot do. For instance, if you use Google Assistant to set up an event by saying "Create an event called Sales team meeting for Monday 1 o'clock", it creates an event card (below left) and asks "Would you like to save this event?"

But then you remember that the team meeting has moved to a new venue this time, so you say "Set the location to Gates center" (you can do that in the app, right?). The assistant replies "I'm not sure about what you said, would you like me to save this event?" Dang!

What can be done? A graceful exit strategy. Since setting a location is a likely thing for users to say while creating an event, the assistant could let them know: "Sorry, adding locations is something I'm still working on." That way, users don't feel they asked for something absurd.
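A minimal sketch of that graceful exit, assuming the designer keeps a list of plausible-but-unsupported requests alongside the supported ones. The intent names (`create_event`, `set_location`) and the keyword classifier are hypothetical; the point is the three-way split between "can do", "known gap", and "truly unrecognized".

```python
# Hypothetical intent names for the calendar example.
SUPPORTED = {"create_event"}
# Requests users plausibly make but the assistant cannot handle yet,
# mapped to a human-readable description of the missing feature.
KNOWN_UNSUPPORTED = {"set_location": "adding locations"}

def classify(utterance: str) -> str:
    """Toy keyword classifier standing in for a real NLU model."""
    text = utterance.lower()
    if "location" in text or "venue" in text:
        return "set_location"
    if text.startswith("create an event"):
        return "create_event"
    return "unknown"

def respond(utterance: str) -> str:
    intent = classify(utterance)
    if intent in SUPPORTED:
        return "OK."
    if intent in KNOWN_UNSUPPORTED:
        # Graceful exit: name the gap instead of claiming not to understand.
        feature = KNOWN_UNSUPPORTED[intent]
        return (f"Sorry, {feature} is something I'm still working on. "
                "Would you like me to save this event?")
    # Truly unrecognized speech still gets the generic reprompt.
    return "I'm not sure about what you said. Would you like me to save this event?"

print(respond("Set the location to Gates center"))
```

Keeping the known gaps in their own table also gives the team a running backlog of what users actually ask for.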

3. Visibility of system status

How should system status be communicated to users? This usability heuristic says users should always be aware of what's happening in the system; for example, a progress bar shows how much of a file has been downloaded. In Voice UIs, users state a command and then wait for an action to be taken. If the system doesn't tell them what is happening at that point, they can be left puzzled about what's going on.

Google Assistant's bouncing dots, Echo's lit-up ring, and Siri's dynamic waveforms all communicate that the system is 'processing'. But in settings where screens are rarely or never involved, such as headsets, cars, and the home (an Echo I'm not looking at), this signal that the assistant is processing or looking something up needs to be clearer. Assistants usually reply quite fast, but when processing takes longer, audio feedback could tell users it's still working.
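One way to sketch that audio feedback, using Python's `asyncio`: start the lookup, wait up to a threshold, and only if it hasn't finished, speak a short "still working" cue before delivering the answer. The 1.5-second threshold, the `say` callback (standing in for a text-to-speech hook), and the cue wording are all assumptions for illustration.

```python
import asyncio
from typing import Awaitable, Callable, TypeVar

T = TypeVar("T")

async def answer_with_progress(
    lookup: Awaitable[T],
    say: Callable[[str], None],
    threshold_s: float = 1.5,  # hypothetical "this feels slow" threshold
) -> T:
    """Run a lookup; if it takes longer than threshold_s, tell the user it's still working."""
    task = asyncio.ensure_future(lookup)
    # asyncio.wait returns (done, pending) when the timeout expires; it does not cancel the task.
    done, _pending = await asyncio.wait({task}, timeout=threshold_s)
    if not done:
        say("Still looking that up...")  # audio cue for screenless devices
    return await task

async def slow_weather_lookup() -> str:
    await asyncio.sleep(0.2)  # simulated slow backend
    return "Sunny, 72 degrees"

spoken = []
result = asyncio.run(answer_with_progress(slow_weather_lookup(), spoken.append, threshold_s=0.05))
print(spoken, result)
```

Fast answers produce no extra speech at all, so the cue only shows up when silence would otherwise leave the user wondering.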

4. Error correction

A classic problem with talking to VAs is that they mishear you. And then there's no way to correct them, except to type if there's a screen, or to give the whole command all over again (which users usually don't bother to do, so they abandon the task).

Say you dictate a message to a VA: "Text John I'm running a bit late. Could you fill me up on the presentation?" and the VA responds "Here's your message to John… I'm running a bit mate. Could you fill me up on the presentation". Now what? It's frustrating. How do I correct the misheard word? If I could type, I would have written the text myself. But there's coffee in one hand and a laptop in the other.

What can be done? Speech recognition may never be 100% accurate, and errors are bound to happen. But UX could make corrections easy. What if you could say "No, I said I'm running a bit 'late'" and it made the correction? In fact, more could be done: "Naah! Remove that part about 'filling me up' and add 'Will join the conference call in 10'." I'm sure such enhancements are underway. Users could then forgive having to correct the occasional error in exchange for the ability to go hands-free once in a while.
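A rough sketch of how a "No, I said …" correction could be applied, assuming the recognizer has already extracted the corrected phrase as plain text. It slides a window the length of that phrase across the draft, uses `difflib.SequenceMatcher` to find the most similar stretch, and swaps it in. This fuzzy-matching approach and the 0.8 confidence cut-off are illustrative choices, not how any shipping assistant is known to work.

```python
import difflib
import string

def _norm(word: str) -> str:
    """Lowercase and drop surrounding punctuation so 'mate.' compares like 'mate'."""
    return word.strip(string.punctuation).lower()

def apply_correction(draft: str, corrected_phrase: str, min_score: float = 0.8) -> str:
    """Replace the stretch of `draft` that best matches `corrected_phrase`.

    The user repeats a few words of context plus the fix ("I'm running a bit late"),
    so the most similar same-length window in the draft is where the fix belongs.
    """
    words = draft.split()
    fix = corrected_phrase.split()
    if not fix:
        return draft
    n = len(fix)
    target = " ".join(_norm(w) for w in fix)
    best_start, best_score = None, 0.0
    for start in range(len(words) - n + 1):
        window = " ".join(_norm(w) for w in words[start:start + n])
        score = difflib.SequenceMatcher(None, window, target).ratio()
        if score > best_score:
            best_start, best_score = start, score
    if best_start is None or best_score < min_score:
        # Not confident about where the fix goes: keep the draft and re-prompt instead.
        return draft
    # Keep the punctuation that followed the replaced stretch (e.g. the period).
    last = words[best_start + n - 1]
    trailing = last[len(last.rstrip(string.punctuation)):]
    fix[-1] = fix[-1].rstrip(string.punctuation) + trailing
    return " ".join(words[:best_start] + fix + words[best_start + n:])

draft = "I'm running a bit mate. Could you fill me up on the presentation"
print(apply_correction(draft, "I'm running a bit late"))
# I'm running a bit late. Could you fill me up on the presentation
```

Requiring the user to repeat a little context, rather than just the single word, is what makes the location of the fix unambiguous.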

5. Even better, error prevention

In light of the previous point, another usability heuristic applies: preventing errors altogether would be even better. Google Assistant does a fabulous job at preventing errors. I tried to trick it, but…

6. Repeated activation

Voice technology is anthropomorphized. Whether the voice is male or female, a persona builds in our minds when we talk to Siri or Alexa. And to invoke these assistants, we need to say an activation command like "Hey Siri", "Ok Google" or "Alexa", so that they 'wake up' and 'listen' to what we have to say.

However, when we talk to each other, we don't say "Hey John, would you like to grab a coffee?" and then "Hey John, let's Uber to Starbucks". It's just plain awkward. So is having to say "Hey Siri" or "Ok Google" before every single utterance. It's not a deal breaker, but it does affect the experience of interacting with the AI.

What can be done? We can't have them always listening, for reasons of battery life and, more importantly, our privacy. But perhaps the assistants could keep listening a little longer once a conversation starts, as we humans do when we talk. We say something, the other person replies, and then they don't walk off (unless someone was nasty); they wait to hear what you have to say next and keep the conversation alive. This is key. Assistants need to give humans the chance to keep the conversation alive, not just turn off the mic once a task is done :/ Perhaps letting users hold the conversation open with small non-lexical sounds, like "umm.." or "uhh..", could help achieve this.
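Here is a small sketch of that "keep listening a little longer" behavior as a state machine. After any accepted turn the mic stays open for a short follow-up window, so the next utterance needs no wake word; a filler like "umm" extends the window without triggering an action; once the window lapses, the assistant goes back to wake-word-only mode. The 5-second window, the filler list, and passing timestamps in explicitly (to keep it testable) are assumptions.

```python
from dataclasses import dataclass
from typing import Optional, Tuple

WAKE_WORDS = ("hey siri", "ok google", "alexa")
FILLERS = {"umm", "uhh", "hmm"}  # hypothetical non-lexical "keep listening" cues

def _strip_wake_word(text: str) -> Tuple[bool, str]:
    """Return (woke, remaining_text) for an already-lowercased utterance."""
    for wake in WAKE_WORDS:
        if text.startswith(wake):
            return True, text[len(wake):].strip(" ,")
    return False, text

@dataclass
class ListeningSession:
    follow_up_s: float = 5.0            # hypothetical follow-up window length
    open_until: Optional[float] = None  # None means: only react to a wake word

    def hear(self, utterance: str, now: float) -> Optional[str]:
        """Return the command to act on, or None if the assistant should not act."""
        woke, text = _strip_wake_word(utterance.lower().strip())
        in_window = self.open_until is not None and now <= self.open_until
        if not (woke or in_window):
            self.open_until = None  # window expired: back to wake-word-only mode
            return None
        # Every accepted turn (or filler) keeps the conversation open a bit longer.
        self.open_until = now + self.follow_up_s
        if not text or text.strip(".") in FILLERS:
            return None  # "umm...": the user is still thinking, nothing to execute yet
        return text

s = ListeningSession()
print(s.hear("Hey Siri, what's the weather", now=1.0))  # what's the weather
print(s.hear("How about tomorrow", now=4.0))            # how about tomorrow (no wake word needed)
```

Because the window always closes on its own, this keeps most of the privacy and battery benefits of wake-word-only listening.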

7. Tradeoffs — Shorter dialogue flow or accuracy of user intent?

Voice UI designers always try to accomplish a task with the shortest possible dialogue flow. In this pursuit, the accuracy of understanding the user's intent often suffers. For example, if you say "Call Jason" to your phone and you have two contacts named Jason Wang and Jason Matthew, what should the VA do?

A little background: Jason Wang is an old friend from college who you haven't talked to in a while, and Jason Matthew is a colleague from work. So should the assistant ask "Which Jason did you mean, Jason Matthew or Jason Wang?" and wait for your reply, or should it call Jason Matthew straight away, risking being wrong but usually finishing the task faster? I would go with the second, and then make it easy to hang up when the guess is wrong. But we're not designing for ourselves.
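The "guess, but make it easy to back out" option can be sketched as a simple policy: rank the matching contacts by a usage signal and call immediately only when one match clearly dominates; otherwise ask. The `calls_last_30_days` field, the 3x dominance threshold, and the prompt wording are hypothetical, and real thresholds would need to come from the user testing discussed next.

```python
from dataclasses import dataclass
from typing import List, Tuple

@dataclass
class Contact:
    name: str
    calls_last_30_days: int  # hypothetical usage signal from the call log

def resolve_call(first_name: str, contacts: List[Contact], dominance: float = 3.0) -> Tuple[str, str]:
    """Decide whether to call right away or ask which contact was meant."""
    matches = [c for c in contacts if c.name.split()[0].lower() == first_name.lower()]
    if not matches:
        return "not_found", f"I couldn't find {first_name} in your contacts."
    ranked = sorted(matches, key=lambda c: c.calls_last_30_days, reverse=True)
    top = ranked[0]
    runner_up_calls = ranked[1].calls_last_30_days if len(ranked) > 1 else 0
    # Guess only when one match clearly dominates; +1 avoids dividing by zero.
    if len(ranked) == 1 or (top.calls_last_30_days + 1) / (runner_up_calls + 1) >= dominance:
        # Short flow: call immediately, but make backing out easy.
        return "call", f"Calling {top.name}. Say 'hang up' if that's the wrong {first_name}."
    # Longer but safer flow: ask.
    options = " or ".join(c.name for c in ranked)
    return "ask", f"Which {first_name} did you mean: {options}?"

contacts = [Contact("Jason Wang", 0), Contact("Jason Matthew", 12)]
print(resolve_call("Jason", contacts))
```

The dominance parameter makes the tradeoff explicit: raising it favors accuracy (more questions), lowering it favors shorter flows (more guesses).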

What can be done? It is hard to say what users would actually find useful and respectful of their privacy. That makes it very important to prototype interactions quickly and test them with users. This brings me to my next point.

8. Difficult to prototype and test

When we prototype GUIs or paper mockups of concepts and test them with users, there are only so many things users can do. They may click the button you want them to, go off on a tangent, or abandon the task altogether. But with a Voice UI, if you design a user test for a set of dialogue flows and give the initial prompt "You can try to order some groceries you're out of with our voice assistant", users can phrase it in any number of ways: "I want to buy…", "I need…", "Can you please send me…", and so on. How do you test a prototype without the whole technology already in place?

One way we tested Voice UIs within the design team was by role-playing the user and the voice agent to hear the dialogues aloud. This was an effective way to check how the experience sounded, but it is no substitute for usability testing.

Lately some prototyping tools have been designed for testing Voice UIs. If you've used any tools to test voice assistants with users at a 'prototype' level, please share!

9. Design patterns, ouch

There are almost no established design patterns to beg, borrow, or steal in Voice User Interface design, though there are some good reads out there. Amazon has put together great documentation on best practices for Voice UI design. If you've come across some good resources, please share. Thanks!

10. Voice UI design documentation

Voice User Interface design encompasses a wide range of UX outcomes: from creating the voice agent's personality, to dialogue/UX principles, user scenarios, and what is called dialogue management, namely the agent-user dialogue flows.

Unlike GUI design, which has established tools for creating and collaborating on designs, Voice UIs are usually documented as dialogue flows. These flows list possible user utterances and assistant responses, generally as comprehensively as possible, so as to cover every scenario of conversation with the voice assistant. This can be quite a challenging task.
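Because dialogue flows are essentially a graph of turns, writing them down as data makes it possible to check some of that coverage automatically. The sketch below is one hypothetical way to do it: each turn has a prompt, intents with sample utterances and a next turn, and a fallback for unrecognized speech, and a small linter flags missing fallbacks, links to turns that don't exist, and intents with too few sample phrasings. The structure, names, and the three-sample minimum are assumptions, not a standard format; the grocery phrasings come from the testing example above.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Tuple

@dataclass
class Turn:
    prompt: str
    # intent -> (sample user utterances, id of the next turn)
    transitions: Dict[str, Tuple[List[str], str]] = field(default_factory=dict)
    fallback: Optional[str] = None  # turn to go to when nothing matches

def lint_flow(flow: Dict[str, Turn], min_samples: int = 3) -> List[str]:
    """List gaps in a dialogue flow: missing fallbacks, dangling links, thin samples."""
    problems = []
    for turn_id, turn in flow.items():
        if turn.transitions and turn.fallback is None:
            problems.append(f"{turn_id}: no fallback for unrecognized speech")
        if turn.fallback is not None and turn.fallback not in flow:
            problems.append(f"{turn_id}: fallback '{turn.fallback}' does not exist")
        for intent, (samples, next_id) in turn.transitions.items():
            if next_id not in flow:
                problems.append(f"{turn_id}/{intent}: next turn '{next_id}' does not exist")
            if len(samples) < min_samples:
                problems.append(f"{turn_id}/{intent}: only {len(samples)} sample utterance(s)")
    return problems

grocery_flow = {
    "start": Turn(
        prompt="What would you like to order?",
        transitions={"order_item": (
            ["I want to buy {item}", "I need {item}", "Can you please send me {item}"],
            "confirm")},
        fallback="start",
    ),
    "confirm": Turn(
        prompt="Adding {item} to your cart. Anything else?",
        transitions={"done": (["No", "That's all"], "bye")},
        fallback="confirm",
    ),
    "bye": Turn(prompt="Your order is on its way."),
}
print(lint_flow(grocery_flow))  # ['confirm/done: only 2 sample utterance(s)']
```

A check like this can't tell you whether a flow feels natural, but it does catch the mechanical holes that are easy to miss in a large flow document.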

11. That feeling… can I possibly think of everything?

As a VUI designer, one of my key contributions was to come up with as many scenarios as possible while designing the Voice UI layer on top of the existing smartphone experience. For example, I can use the Maps application to find locations, addresses, and route or traffic info. But if this were enabled through voice interaction, say for a driving scenario, what would users most likely say?

Would users say "Navigate to Starbucks on Forbes avenue", "Navigate to the nearest Starbucks", "How does the traffic look like on Forbes?", "How long will it take to the nearest Starbucks?", "Are any Starbucks around open?", or perhaps, in the middle of the journey, "What's my ETA?"

I confess this involves some head-scratching, and it often leaves behind a nagging feeling: can I possibly think of ALL user scenarios? Most likely not… but most of the viable ones? Yes. And then, apply user empathy. When will users ask for their ETA? Should they have to ask for it at all? Is there a medium other than voice that better communicates how far they still have to go? These are decisions UX designers are best placed to make.

12. Regionally well-versed assistants

Sitting in Bangalore, testing assistants designed for North American markets, I sometimes felt bad about my accent. Siri wouldn't even recognize my name: if I said "Siri, call Arnita" (an uncommon name in India), it would bring up random matches from the phonebook and rarely, if ever, catch the name correctly.

In another instance, though, Siri asked "Can you help me learn how to pronounce Abhilash?" (a friend's name, never mine-d), and that was pretty smart. Google Assistant does a good job of mimicking accents, and perhaps better understanding of users' accents is underway. Also, to add delight, what if assistants could answer with regional phrases or idioms?

13. The social awkwardness

No matter how far voice recognition accuracy has come, and however many potential uses we see in our daily lives, the awkwardness of using these assistants in social settings remains.

Then again, it was awkward at first when people started using earphones hidden behind caps and hoodies, making others wonder: is that dude talking to himself? Now we just assume he's probably on a call. Perhaps the same will happen with assistants in our pockets in the future.

14. Niche use-cases are hard to find, but rewarding

Alexa (actually, the Echo) won here. The home is a place where users can try voice technology and have it fail: they're either surprised or have a good laugh about it. Plus, Alexa is really smart too! What needs to be done is to find scenarios where voice is a 'need', not just another modality.

15. Designing Voice UIs is nascent too!

Rest assured: these technologies (good voice recognition, natural language understanding, and the underlying machine learning or AI) are novel, and so is the practice of designing user experiences for them.

Designed well, Voice UIs have strong use cases to offer. As with all design, it's about picking the right cases and designing them right.

I understand that at some points in this article you were probably wondering, "Well, do I really need to use voice? I could just pull out my phone and tap and type things in!" But sometimes our ability to interact via touch is compromised: while jogging or cooking, for example, or, as I recently discovered, when it's too cold in Pittsburgh to take off your gloves to use your phone.

Arnita is working with Microsoft on the Cortana and AI team after pursuing her Master's in Human-Computer Interaction at CMU. She loves to discuss UX (especially UX for intelligence) and food. Get in touch!

Source: https://uxdesign.cc/why-is-it-so-difficult-to-use-and-design-voice-uis-87f2976aa796
